Write it like Riak. Cache it like Redis.

Some Big Data applications require caching to meet their performance needs. Redis provides fast in-memory caching with the ability to fine-tune cache contents and durability. Riak KV Enterprise, with its Redis integration, reduces latency to improve performance and meet your application’s requirements.

CACHING IMPROVES APPLICATION PERFORMANCE

Redis caching with Riak KV Enterprise improves application performance. The high availability, scalability, data synchronization, and automatic data sharding of Riak KV make Redis enterprise-grade.

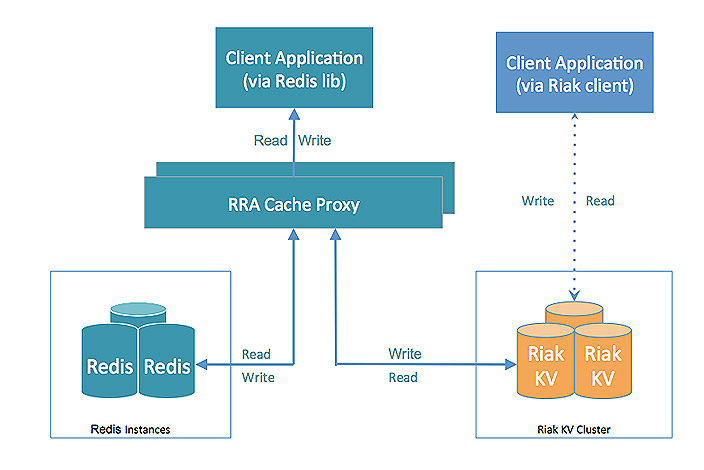

In Riak KV, the Cache Proxy provides pre-sharding and connection aggregation, which reduce latency and increase addressable cache memory space with lower-cost hardware. The Cache Proxy also increases performance by pipelining requests to Redis. Pipelining can be performed at the client, but performing it in the server-side Cache Proxy is more effective: because the proxy aggregates connections from many clients, it can batch their requests together into a single pipeline. Pipelining reduces network roundtrips to Redis and lowers CPU usage on Redis.
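To make the three proxy techniques concrete, here is a minimal, self-contained sketch of a toy proxy. It is not the Riak Cache Proxy's code: `ToyCacheProxy` and `FakeRedisShard` are hypothetical names, the shards are in-memory stand-ins for Redis instances, and routing by CRC32 modulo the shard count is an assumed sharding scheme. It shows pre-sharding (a fixed set of shards chosen up front), connection aggregation (requests from many clients funnel through one logical connection per shard), and pipelining (all commands bound for a shard travel in one roundtrip).

```python
import zlib
from collections import defaultdict


class FakeRedisShard:
    """Hypothetical in-memory stand-in for one Redis instance; counts roundtrips."""

    def __init__(self):
        self.data = {}
        self.roundtrips = 0

    def execute_batch(self, commands):
        # One call models one network roundtrip, however many commands it carries.
        self.roundtrips += 1
        results = []
        for op, key, *args in commands:
            if op == "SET":
                self.data[key] = args[0]
                results.append("OK")
            elif op == "GET":
                results.append(self.data.get(key))
            else:
                raise ValueError(f"unsupported command: {op}")
        return results


class ToyCacheProxy:
    """Hypothetical proxy: pre-sharded, aggregates clients, pipelines per shard."""

    def __init__(self, num_shards):
        # Pre-sharding: the number of shards is fixed when the proxy starts.
        self.shards = [FakeRedisShard() for _ in range(num_shards)]

    def shard_for(self, key):
        # Stable hash (assumed scheme), so a key always maps to the same shard.
        return zlib.crc32(key.encode()) % len(self.shards)

    def submit(self, pending):
        """pending: list of (client_id, command) tuples gathered from many clients."""
        by_shard = defaultdict(list)
        for idx, (_client, cmd) in enumerate(pending):
            by_shard[self.shard_for(cmd[1])].append((idx, cmd))
        replies = [None] * len(pending)
        for shard_idx, items in by_shard.items():
            # Pipelining: every command for this shard goes out in a single batch,
            # in arrival order, so a SET followed by a GET of one key stays ordered.
            results = self.shards[shard_idx].execute_batch([cmd for _, cmd in items])
            for (idx, _), result in zip(items, results):
                replies[idx] = result
        return replies


if __name__ == "__main__":
    proxy = ToyCacheProxy(num_shards=4)
    # 100 clients each send one SET; the proxy needs at most one roundtrip per shard.
    proxy.submit([(c, ("SET", f"user:{c}", f"name-{c}")) for c in range(100)])
    print(sum(s.roundtrips for s in proxy.shards))
```

Without the proxy, those 100 clients would cost 100 roundtrips; with per-shard batching the count is bounded by the number of shards, which is the effect the paragraph above attributes to server-side pipelining.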

Because any Redis client can query the cache, existing Redis clients need no changes to access data in Riak KV. If the requested data is not in the Redis cache, it is read from Riak KV. Data is also synchronized automatically between Redis and Riak KV, which increases availability: a read failure in Redis is resolved by reading from Riak KV, and the result is written back to the Redis cache.
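The read path described above is the general read-through pattern. The sketch below illustrates it under stated assumptions: `DictStore` and `read_through_get` are hypothetical names, two in-memory dictionaries stand in for Redis and Riak KV, and a `ConnectionError` models a failed Redis read. It is a generic illustration, not the add-on's implementation.

```python
class DictStore:
    """Hypothetical in-memory stand-in for Redis (cache) or Riak KV (backend)."""

    def __init__(self, data=None):
        self.data = dict(data or {})
        self.reads = 0
        self.available = True

    def get(self, key):
        if not self.available:
            raise ConnectionError("store unavailable")
        self.reads += 1
        return self.data.get(key)

    def set(self, key, value):
        if not self.available:
            raise ConnectionError("store unavailable")
        self.data[key] = value


def read_through_get(cache, backend, key):
    """Serve from cache; on a miss or cache failure, read the backend and fill the cache."""
    try:
        value = cache.get(key)
    except ConnectionError:
        value = None  # treat a failed cache read like a miss
    if value is not None:
        return value  # cache hit
    value = backend.get(key)
    if value is not None:
        try:
            cache.set(key, value)  # write back so the next read is a hit
        except ConnectionError:
            pass  # cache still down; the backend result is served anyway
    return value


if __name__ == "__main__":
    redis_cache = DictStore()
    riak = DictStore({"user:42": "Ada"})
    print(read_through_get(redis_cache, riak, "user:42"))  # miss, loaded from backend
    print(read_through_get(redis_cache, riak, "user:42"))  # hit, backend not touched
```

Treating a cache failure as a miss is what lets the backend absorb Redis read failures, as the paragraph above describes.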

Deploying Redis caching with Riak KV is as simple as specifying where the code should be deployed. Both static information (configuration) and dynamic information (port numbers, etc.) are managed at installation time, for newly deployed instances and existing Redis installations alike.

Key features of the Redis Add-on include:

- Pre-sharding

- Connection Aggregation

- Command Pipelining

- Read-through Cache

- Write-around Cache
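The list names a write-around cache without describing it. In the general write-around pattern, writes go straight to the backing store and bypass the cache. The sketch below assumes that the stale cached copy is then deleted, so the next read reloads it through the read-through path. That invalidation step is one common choice, not something this page confirms about the add-on. `Store`, `write_around_set`, and `read_through_get` are hypothetical names over in-memory dictionaries.

```python
class Store:
    """Hypothetical in-memory stand-in for Redis (cache) or Riak KV (backend)."""

    def __init__(self):
        self.data = {}


def write_around_set(cache, backend, key, value):
    backend.data[key] = value  # the write goes to the system of record only
    cache.data.pop(key, None)  # assumed: invalidate any stale cached copy


def read_through_get(cache, backend, key):
    if key in cache.data:
        return cache.data[key]  # cache hit
    value = backend.data.get(key)
    if value is not None:
        cache.data[key] = value  # populate the cache on a miss
    return value


if __name__ == "__main__":
    redis_cache, riak = Store(), Store()
    write_around_set(redis_cache, riak, "k", "v1")
    print(read_through_get(redis_cache, riak, "k"))  # loaded from backend, now cached
    write_around_set(redis_cache, riak, "k", "v2")
    print(read_through_get(redis_cache, riak, "k"))  # stale copy was dropped
```

Write-around keeps write-heavy, rarely read keys from crowding the cache, at the cost of one backend read after each update.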

BENEFITS OF REDIS INTEGRATION IN RIAK KV

Sometimes Big Data applications need caching for better performance. Riak KV Enterprise with Redis caching reduces latency, giving your customers a better user experience.

Increase performance and scale

When your Big Data application has to be optimized down to the millisecond, you need the speed of Redis combined with the power of Riak KV. The Cache Proxy increases performance by pipelining requests to Redis. Pipelining reduces network roundtrips to Redis and lowers CPU usage on Redis, increasing overall application performance.

Reduce costs

Managing the costs of Big Data applications is critical to your business. In the Redis integration with Riak KV, the Cache Proxy provides pre-sharding and connection aggregation as a service. This reduces latency and increases addressable cache memory space with lower-cost hardware.

Get to market faster

In the highly competitive Big Data market, faster application development can give you a jump on the competition. Riak KV integration with Redis caching provides automatic sharding, eliminating labor-intensive and error-prone manual sharding. The Cache Proxy also removes the need for caching logic in client code. Together, these reduce the burden on your developers and help you get to market faster.